Revisiting Derivatives

Recall that the exact derivative is defined as:

\(\frac{df}{dx}=\lim_{\Delta x\rightarrow0}\frac{f(x+\Delta x)-f(x)}{\Delta x}\)

where \(f=f(x)\). In your lessons on initial value problems, you approximated this derivative by dropping the limit, \(\lim_{\Delta t \rightarrow 0}\), and keeping a finite step. When you describe derivatives over space, you can use the same approximation, but in this notebook you will also try a few alternatives. The following approximation is called a forward difference derivative,

\(\frac{df}{dx}\approx\frac{f(x+\Delta x)-f(x)}{\Delta x},\)

because it approximates \(\frac{df}{dx}\) using the current value, \(f(x)\), and the value one step forward, \(f(x+\Delta x)\).
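As a quick illustration, here is a minimal sketch of the forward difference in NumPy. The helper name `forward_difference` and the test point \(x=1\) are illustrative choices, not part of the original notebook; the sketch simply evaluates the formula above for \(f(x)=\sin(x)\) with shrinking \(\Delta x\).

```python
import numpy as np

def forward_difference(f, x, dx):
    """Forward difference estimate of df/dx at x using step dx (hypothetical helper)."""
    return (f(x + dx) - f(x)) / dx

# Example: d/dx sin(x) at x = 1, where the exact value is cos(1)
x0 = 1.0
for dx in [0.1, 0.01, 0.001]:
    approx = forward_difference(np.sin, x0, dx)
    print(f"dx={dx:<6} approx={approx:.6f} exact={np.cos(x0):.6f}")
```

The approximation approaches \(\cos(1)\) as \(\Delta x\) shrinks, which is the finite-step version of taking the limit.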

Taylor Series Expansion

To estimate the truncation error of this approximation, expand the function in a Taylor series about \(x_{i}\):

\(f(x_{i+1})=f(x_{i})+f'(x_{i})h+\frac{f''(x_{i})}{2!}h^2+...\)

where \(f'=\frac{df}{dx}\) and \(x_{i+1} = x_{i}+h.\)

Solving for \(f'(x_{i})\) gives the forward difference plus the neglected terms:

\(f'(x_{i})=\frac{f(x_{i+1})-f(x_{i})}{h}-\frac{f''(x_{i})}{2!}h+...\)

The leading neglected term is proportional to the step size, \(h\), so the truncation error is on the order of \(h\). In big-O notation this is written \(error\approx O(h)\):

\(f'(x_{i})=\frac{f(x_{i+1})-f(x_{i})}{h}+O(h)\)
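To see the \(O(h)\) behavior numerically, the sketch below (assuming \(f(x)=\sin(x)\) and \(x_i=1\), both arbitrary choices) compares the forward difference error with the leading term \(\frac{f''(x_{i})}{2}h\) from the expansion above, and checks that halving \(h\) roughly halves the error.

```python
import numpy as np

# f(x) = sin(x) at x0 = 1, so f'(x0) = cos(1) and f''(x0) = -sin(1)
x0 = 1.0
exact = np.cos(x0)
f2 = -np.sin(x0)

hs = [0.1, 0.05, 0.025]
errors = []
for h in hs:
    approx = (np.sin(x0 + h) - np.sin(x0)) / h  # forward difference
    error = approx - exact
    errors.append(error)
    # The Taylor series predicts error ~ f''(x0) * h / 2 for small h
    print(f"h={h:<6} error={error:+.3e}  f''(x0)*h/2={f2 * h / 2:+.3e}")

# If the error is O(h), halving h should roughly halve the error
ratios = [errors[k] / errors[k + 1] for k in range(len(errors) - 1)]
print("error ratios when h is halved:", ratios)
```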

Higher order derivatives

You have already solved first-order numerical derivative problems in Project_01 and CompMech03-IVPs. Now, you will look at higher order derivatives, starting with \(\frac{d^2f}{dx^2}=f''(x)\). To approximate it, you need more information: a second Taylor expansion.

First, expand the function about \(x_{i}\) one step, \(h\), forward:

\(f(x_{i+1})=f(x_{i})+f'(x_{i})h+\frac{f''(x_{i})}{2!}h^2+...\)

Next, expand the function about \(x_{i}\) two steps, \(2h\), forward:

\(f(x_{i+2})=f(x_{i})+f'(x_{i})2h+\frac{f''(x_{i})}{2!}4h^2+...\)

Subtract \(2f(x_{i+1})\) from \(f(x_{i+2})\) to eliminate \(f'(x_{i})\), which leaves \(f(x_{i+2})-2f(x_{i+1})=-f(x_{i})+f''(x_{i})h^2+O(h^3)\), and then solve for \(f''(x_{i})\):

\(f''(x_{i})=\frac{f(x_{i+2})-2f(x_{i+1})+f(x_{i})}{h^2}+O(h)\)

Here you have the numerical second derivative of a function, \(f(x)\), with truncation error of \(\approx O(h)\)
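A similar check for the forward second derivative, again a sketch assuming \(f(x)=\sin(x)\) at \(x_i=1\) with a hypothetical helper `forward_second_difference`: if the truncation error is \(O(h)\), halving \(h\) should roughly halve the error.

```python
import numpy as np

def forward_second_difference(f, x, h):
    """Forward difference estimate of f''(x) using x, x + h, x + 2h (hypothetical helper)."""
    return (f(x + 2 * h) - 2 * f(x + h) + f(x)) / h**2

x0 = 1.0
exact = -np.sin(x0)  # f''(x) for f(x) = sin(x)

hs = [0.02, 0.01, 0.005]
errors = [forward_second_difference(np.sin, x0, h) - exact for h in hs]
for h, err in zip(hs, errors):
    print(f"h={h:<6} error={err:+.3e}")

# O(h) truncation error: halving h should roughly halve the error
ratios = [errors[k] / errors[k + 1] for k in range(len(errors) - 1)]
print("error ratios when h is halved:", ratios)
```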

Using your numerical derivatives

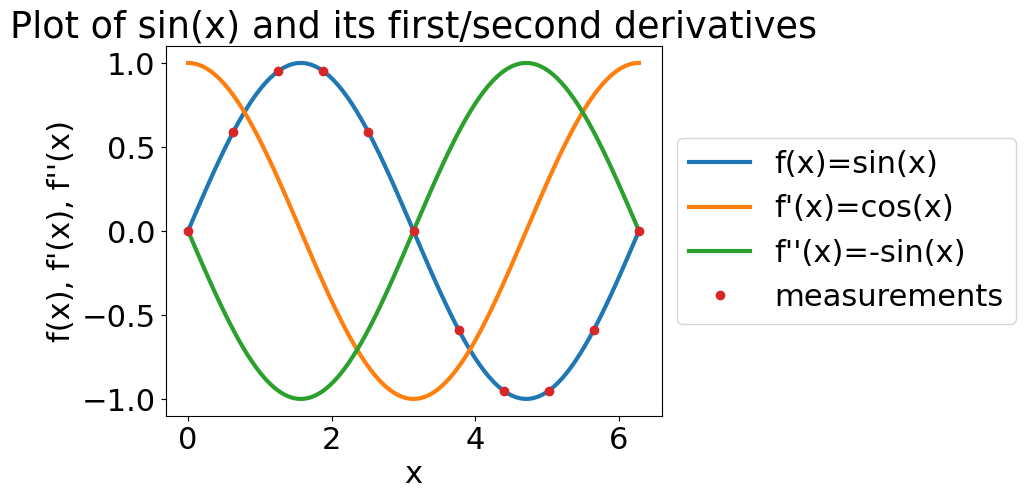

Consider the example of taking the derivative of \(f(x) = \sin(x)\) sampled at only 11 evenly spaced points over one period (10 intervals). Assume there is no random error in the signal. First, plot the values you expect, since you know the derivatives of \(\sin(x)\) analytically.

import numpy as np

import matplotlib.pyplot as plt

plt.rcParams.update({'font.size': 22})

plt.rcParams['lines.linewidth'] = 3

x=np.linspace(0,2*np.pi,11)

xx=np.linspace(0,2*np.pi,100)

## analytical derivatives

y=np.sin(x)

dy=np.cos(xx)

ddy=-np.sin(xx)

plt.plot(xx,np.sin(xx),label='f(x)=sin(x)')

plt.plot(xx,dy,label='f\'(x)=cos(x)')

plt.plot(xx,ddy,label='f\'\'(x)=-sin(x)')

plt.plot(x,y,'o',label='measurements')

plt.legend(bbox_to_anchor=(1,0.5),loc='center left')

plt.title('Plot of sin(x) and its first/second derivatives')

plt.xlabel('x')

plt.ylabel('f(x), f\'(x), f\'\'(x)');

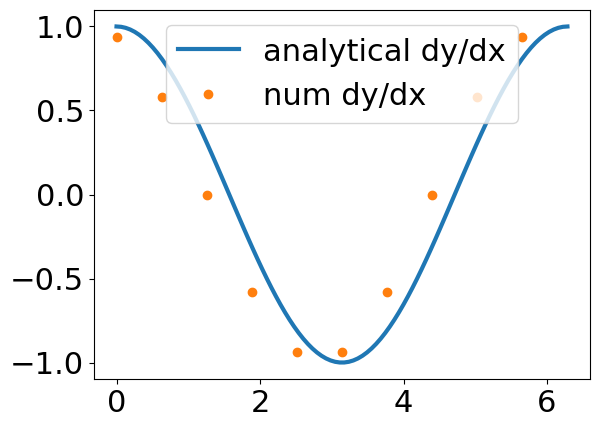

Next, use your definitions of the first and second derivatives to get the approximate derivatives, \(f'(x)\) and \(f''(x)\). Because you are using a forward difference method, the array for \(f'(x)\) has one fewer value than the data and the array for \(f''(x)\) has two fewer, so you plot them against \(x\) with the last 1 and 2 points removed, respectively.

## numerical derivatives

dy_n=(y[1:]-y[0:-1])/(x[1:]-x[0:-1])  # forward difference f'(x_i), one value per interval

ddy_n=(y[2:]-2*y[1:-1]+y[0:-2])/(x[2:]-x[1:-1])**2  # forward difference f''(x_i)

plt.plot(xx,dy,label='analytical dy/dx')

plt.plot(x[:-1],dy_n,'o',label='num dy/dx')

plt.legend();

Exercise

What is the maximum error between the numerical \(\frac{df}{dx}\) and the actual \(\frac{df}{dx}\)?

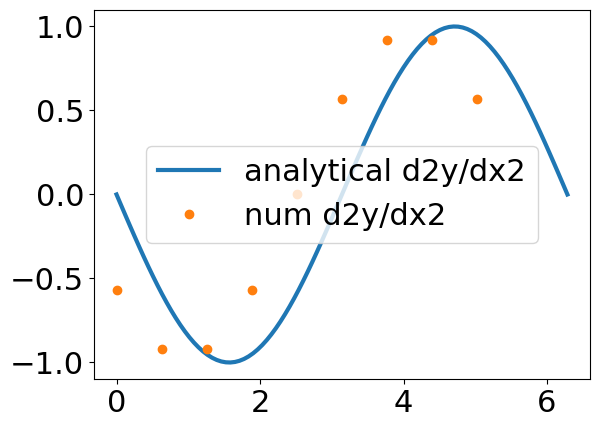

plt.plot(xx,ddy,label='analytical d2y/dx2')

plt.plot(x[:-2],ddy_n,'o',label='num d2y/dx2')

plt.legend();

Your step size is \(h=\pi/5\approx 0.63\). In the graphs, the numerical derivatives appear shifted to the left of the analytical curves. Because the forward difference uses the points \(x_i\) and \(x_{i+1}\), each value is effectively the average slope over that interval, so it best represents the derivative at the midpoint, \(x_i+h/2\), rather than at \(x_i\). Plotting it at \(x_i\) therefore shifts the result by \(h/2\) for each derivative you take.
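One way to check the \(h/2\) shift, sketched here on a finer 21-point grid (\(h=\pi/10\), an assumption chosen for illustration), is to compare the forward difference values with the exact derivative evaluated both at \(x_i\) and at the midpoint \(x_i+h/2\).

```python
import numpy as np

# Illustrative finer grid: 21 points over one period, so h = pi/10
x = np.linspace(0, 2 * np.pi, 21)
y = np.sin(x)
h = x[1] - x[0]

# Forward difference values, one per interval [x_i, x_{i+1}]
dy_n = (y[1:] - y[:-1]) / h

# Compare against the exact derivative at the left point and at the midpoint
err_left = np.max(np.abs(dy_n - np.cos(x[:-1])))
err_mid = np.max(np.abs(dy_n - np.cos(x[:-1] + h / 2)))
print(f"max error vs cos(x_i):       {err_left:.4f}")
print(f"max error vs cos(x_i + h/2): {err_mid:.4f}")
```

The midpoint comparison gives a much smaller error, which is consistent with reading each forward difference as the slope at the center of its interval.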

Exercise

Another first-order, \(error\approx O(h)\), derivative is the backward difference derivative, where

\(f'(x_{i})=\frac{f(x_{i})-f(x_{i-1})}{h}+O(h)\)

\(f''(x_{i})=\frac{f(x_{i})-2f(x_{i-1})+f(x_{i-2})}{h^2}+O(h)\)

Plot the first and second derivatives of \(\sin(x)\) using the same x-locations as you did above.

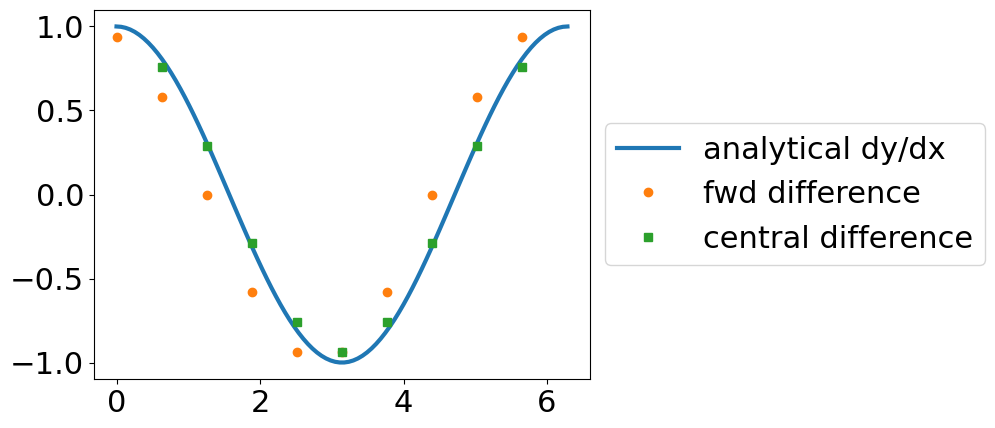
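The backward formulas can be checked at a single point in the same way. This sketch assumes \(f(x)=e^x\), whose first and second derivatives both equal \(e^x\), at \(x_i=1\) with \(h=0.01\); the helper names are hypothetical.

```python
import numpy as np

def backward_difference(f, x, h):
    """Backward difference estimate of f'(x) using x and x - h (hypothetical helper)."""
    return (f(x) - f(x - h)) / h

def backward_second_difference(f, x, h):
    """Backward difference estimate of f''(x) using x, x - h, x - 2h (hypothetical helper)."""
    return (f(x) - 2 * f(x - h) + f(x - 2 * h)) / h**2

# f(x) = exp(x), so f'(x) = f''(x) = exp(x)
x0, h = 1.0, 0.01
d1 = backward_difference(np.exp, x0, h)
d2 = backward_second_difference(np.exp, x0, h)
print(f"f'  approx={d1:.5f}  exact={np.exp(x0):.5f}")
print(f"f'' approx={d2:.5f}  exact={np.exp(x0):.5f}")
```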

Central Difference

Increase accuracy with more points

Both the forward and backward difference methods have truncation error \(error\approx O(h)\), but you can do better. Write the Taylor series of \(f(x)\) about \(x_{i}\) one step forward and one step backward:

forward:

\(f(x_{i+1})=f(x_{i})+f'(x_{i})h+\frac{f''(x_{i})}{2!}h^2+\frac{f'''(x_{i})}{3!}h^3+...\)

backward:

\(f(x_{i-1})=f(x_{i})-f'(x_{i})h+\frac{f''(x_{i})}{2!}h^2-\frac{f'''(x_{i})}{3!}h^3+...\)

Now, subtract \(f(x_{i+1})-f(x_{i-1})\), which cancels the \(f(x_{i})\) and \(f''(x_{i})\) terms, then solve for \(f'(x_{i})\):

\(f'(x_{i})=\frac{f(x_{i+1})-f(x_{i-1})}{2h}+O(h^{2}).\)

The truncation error is now \(\approx O(h^2)\) because the \(f''(x_{i})\) terms cancel in the subtraction. Notice that this derivative is based on the two closest neighbors, \(x_{i-1}\) and \(x_{i+1}\), but not on \(x_{i}\) itself.
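The sketch below, assuming \(f(x)=\sin(x)\) at \(x_i=1\), compares forward and central difference errors for the same step sizes. With \(O(h^2)\) error, halving \(h\) should reduce the central difference error by roughly a factor of 4, versus about 2 for the forward difference. The helper `central_difference` is hypothetical.

```python
import numpy as np

def central_difference(f, x, h):
    """Central difference estimate of f'(x) using x - h and x + h (hypothetical helper)."""
    return (f(x + h) - f(x - h)) / (2 * h)

x0 = 1.0
exact = np.cos(x0)  # f'(x) for f(x) = sin(x)

hs = [0.1, 0.05, 0.025]
fwd_err = [abs((np.sin(x0 + h) - np.sin(x0)) / h - exact) for h in hs]
cen_err = [abs(central_difference(np.sin, x0, h) - exact) for h in hs]
for h, ef, ec in zip(hs, fwd_err, cen_err):
    print(f"h={h:<6} forward error={ef:.2e}  central error={ec:.2e}")

# O(h^2): halving h should cut the central error by about a factor of 4
ratios = [cen_err[k] / cen_err[k + 1] for k in range(len(hs) - 1)]
print("central error ratios when h is halved:", ratios)
```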

This approximation is called the central difference method. You can also apply it to second derivatives, starting from the same two expansions:

forward:

\(f(x_{i+1})=f(x_{i})+f'(x_{i})h+\frac{f''(x_{i})}{2!}h^2+\frac{f'''(x_{i})}{3!}h^3+...\)

backward:

\(f(x_{i-1})=f(x_{i})-f'(x_{i})h+\frac{f''(x_{i})}{2!}h^2-\frac{f'''(x_{i})}{3!}h^3+...\)

Finally, you add \(f(x_{i+1})+f(x_{i-1})\) to eliminate \(f'(x_i)\) and solve for \(f''(x_i)\) as such

\(f''(x_{i}) = \frac{f(x_{i+1})-2f(x_{i})+f(x_{i-1})}{h^2} + O(h^2).\)
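The same kind of check applies to the central second derivative. This is a sketch assuming \(f(x)=\sin(x)\) at \(x_i=1\), with a hypothetical helper `central_second_difference`; halving \(h\) should cut the error by roughly a factor of 4.

```python
import numpy as np

def central_second_difference(f, x, h):
    """Central difference estimate of f''(x) using x - h, x, x + h (hypothetical helper)."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h**2

x0 = 1.0
exact = -np.sin(x0)  # f''(x) for f(x) = sin(x)

hs = [0.1, 0.05, 0.025]
errors = [abs(central_second_difference(np.sin, x0, h) - exact) for h in hs]
ratios = [errors[k] / errors[k + 1] for k in range(len(hs) - 1)]
print("errors:", errors)
print("error ratios when h is halved:", ratios)
```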

x=np.linspace(0,2*np.pi,11)

## analytical derivatives

y=np.sin(x)

dy=np.cos(xx)

ddy=-np.sin(xx)

## forward difference

dy_f=(y[1:]-y[:-1])/(x[1:]-x[:-1])

## central difference

dy_c=(y[2:]-y[:-2])/(x[2:]-x[:-2])

plt.plot(xx,dy,label='analytical dy/dx')

plt.plot(x[:-1],dy_f,'o',label='fwd difference')

plt.plot(x[1:-1],dy_c,'s',label='central difference')

plt.legend(bbox_to_anchor=(1,0.5),loc='center left');

Exercise

Plot the analytical, forward difference, and central difference results for the second derivative of \(\sin(x)\).

Higher order derivatives

The following table lists forward, backward, and central difference approximations for the first four derivatives. In practice, you should use central difference approximations because they reduce the truncation error from \(O(h)\rightarrow O(h^2)\) while using the same number of data points as the corresponding forward or backward formula.

Table of derivatives and the corresponding forward, backward, and central difference method equations.

| Derivative | Forward \(O(h)\) | Backward \(O(h)\) | Central \(O(h^2)\) |
|---|---|---|---|
| \(\frac{df}{dx}\) | \(\frac{f(x_{i+1})-f(x_i)}{h}\) | \(\frac{f(x_{i})-f(x_{i-1})}{h}\) | \(\frac{f(x_{i+1})-f(x_{i-1})}{2h}\) |
| \(\frac{d^2f}{dx^2}\) | \(\frac{f(x_{i+2})-2f(x_{i+1})+f(x_{i})}{h^2}\) | \(\frac{f(x_{i})-2f(x_{i-1})+f(x_{i-2})}{h^2}\) | \(\frac{f(x_{i+1})-2f(x_{i})+f(x_{i-1})}{h^2}\) |
| \(\frac{d^3f}{dx^3}\) | \(\frac{f(x_{i+3})-3f(x_{i+2})+3f(x_{i+1})-f(x_{i})}{h^3}\) | \(\frac{f(x_{i})-3f(x_{i-1})+3f(x_{i-2})-f(x_{i-3})}{h^3}\) | \(\frac{f(x_{i+2})-2f(x_{i+1})+2f(x_{i-1})-f(x_{i-2})}{2h^3}\) |
| \(\frac{d^4f}{dx^4}\) | \(\frac{f(x_{i+4})-4f(x_{i+3})+6f(x_{i+2})-4f(x_{i+1})+f(x_{i})}{h^4}\) | \(\frac{f(x_{i})-4f(x_{i-1})+6f(x_{i-2})-4f(x_{i-3})+f(x_{i-4})}{h^4}\) | \(\frac{f(x_{i+2})-4f(x_{i+1})+6f(x_{i})-4f(x_{i-1})+f(x_{i-2})}{h^4}\) |
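As a sanity check on the central column of the table, this sketch evaluates the third and fourth derivative stencils for \(f(x)=\sin(x)\) at \(x_i=1\), where \(f'''(x)=-\cos(x)\) and \(f''''(x)=\sin(x)\). The function names and \(h=0.01\) are assumptions for illustration.

```python
import numpy as np

def central_third(f, x, h):
    """Central O(h^2) estimate of f'''(x), using the stencil from the table (hypothetical helper)."""
    return (f(x + 2 * h) - 2 * f(x + h) + 2 * f(x - h) - f(x - 2 * h)) / (2 * h**3)

def central_fourth(f, x, h):
    """Central O(h^2) estimate of f''''(x), using the stencil from the table (hypothetical helper)."""
    return (f(x + 2 * h) - 4 * f(x + h) + 6 * f(x) - 4 * f(x - h) + f(x - 2 * h)) / h**4

x0, h = 1.0, 0.01
d3 = central_third(np.sin, x0, h)
d4 = central_fourth(np.sin, x0, h)
print(f"f''''  approx={d4:.6f}  exact={np.sin(x0):.6f}")
print(f"f'''   approx={d3:.6f}  exact={-np.cos(x0):.6f}")
```

Note the trade-off in choosing \(h\): the fourth derivative divides by \(h^4\), so if \(h\) is made too small, floating-point round-off in the numerator is amplified and eventually outweighs the \(O(h^2)\) truncation error.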

What You’ve Learned

How to estimate the truncation error of approximate derivatives

How to approximate derivatives using forward difference methods

How to approximate derivatives using backward difference methods

How to approximate derivatives using central difference methods

How to approximate higher order derivatives with forward, backward, and central differences